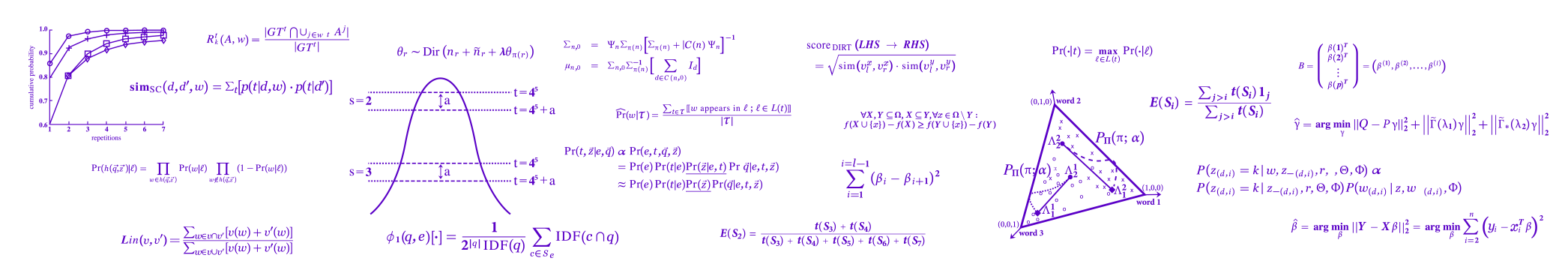

Decomposition Based Reparametrization for Efficient Estimation of Sparse Gaussian Conditional Random Fields

Simultaneously estimating a multi-output regression model and recovering the dependency structure among variables from high-dimensional observations is an interesting and useful task in contemporary statistical learning applications. A prominent approach is to fit a Sparse Gaussian Conditional Random Field by optimizing a regularized maximum likelihood objective, where sparsity is induced by imposing an L1 penalty on the entries of the precision and transformation matrices. We studied how a reparametrization of the original problem can lead to more efficient estimation procedures. In particular, instead of representing the problem through the precision matrix, we used its Cholesky factor, whose attractive properties allow an inexpensive, highly parallelizable optimization algorithm based on coordinate descent.
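One general property that makes a Cholesky parametrization convenient can be checked numerically: the log-determinant of a positive definite matrix is twice the sum of the logs of its Cholesky factor's diagonal. The following is a minimal sketch of that identity only, not the estimation algorithm itself. The lower-triangular convention (precision = L Lᵀ), the helper name `logdet_from_cholesky`, and the randomly generated test matrix are assumptions made for illustration.

```python
import numpy as np

def logdet_from_cholesky(L):
    """Return log det(L @ L.T) for a lower-triangular L with positive diagonal.

    Hypothetical helper for illustration; it relies on det(L @ L.T) = prod(diag(L))**2.
    """
    return 2.0 * np.sum(np.log(np.diag(L)))

# Build an arbitrary symmetric positive definite "precision" matrix (illustrative data).
rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))
precision = A @ A.T + 5.0 * np.eye(5)

# np.linalg.cholesky returns the lower-triangular factor L with precision = L @ L.T.
L = np.linalg.cholesky(precision)

# Compare the direct log-determinant with the one read off the factor's diagonal.
sign, logdet_direct = np.linalg.slogdet(precision)
logdet_chol = logdet_from_cholesky(L)
```

Because the log-determinant depends only on the diagonal entries of the factor, and any factor with a positive diagonal yields a positive definite product, a parametrization of this kind avoids an explicit positive-definiteness check when individual entries are updated.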